July 22nd, 2024

What Is Analysis of Variance (ANOVA) in Statistics?

By Alex Kuo · 10 min read

It’s considered the go-to analysis tool for experimental design, and it’s integral to scientific research. Using it, you’re able to evaluate the differences between several sample means – or averages – to determine if there’s a relationship or a statistically significant difference between them.

It’s analysis of variance, otherwise known as ANOVA. Here, you’re going to learn about this type of statistical test and in what aspects of data analysis it may prove useful.

Defining Analysis of Variance (ANOVA)

ANOVA is a statistical analysis tool that allows you to test whether the means of several groups of data (typically three or more) differ from one another. With it, you separate the total variability found in a dataset into two components: random and systematic.

It’s essentially a calculation that produces a single number, which can’t be negative. A result close to 1 means the variation between your group means is about what you’d expect from the variation within the groups. The further the result rises above 1, the stronger the evidence that the group means really differ.

How to Use ANOVA

ANOVA as a data analysis tool is only useful when you have a numeric response variable and you wish to see how the value of that variable differs across a range of groups. For instance, when testing diabetes medication, scientists measure the impact of experimental medicines on patient blood sugar levels. That’s a numeric response – one for which you can calculate the mean blood sugar level for each medicine.

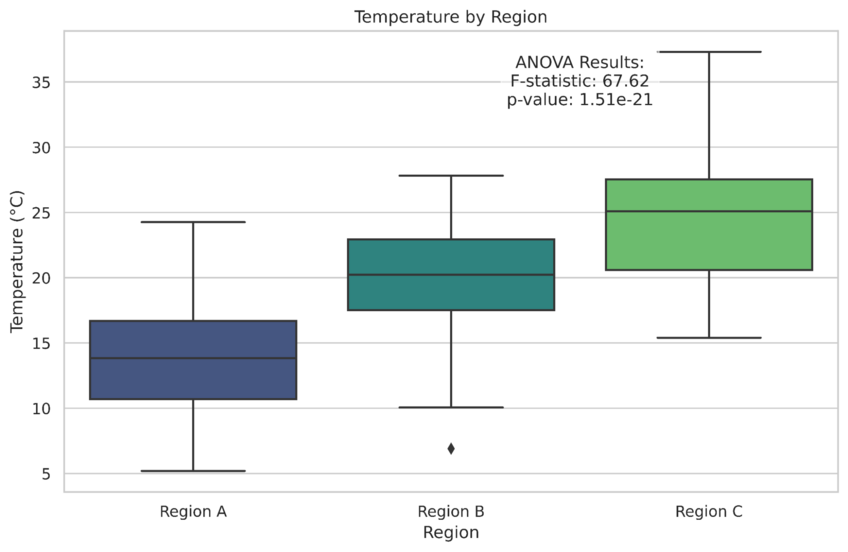

Using ANOVA, you can then compare the mean for each medicine tested to see whether they differ by more than chance would explain. Specifically, the formula’s outcome is called the “F Statistic,” which is the ratio of the “between group” variance to the “within group” variance. The figure you receive allows you to reach a conclusion – is your null hypothesis supported or not? A large F Statistic, meaning the group means vary far more than the observations within each group do, is evidence against the null hypothesis.
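As a rough illustration of the medicine comparison, here is a minimal sketch using SciPy’s `scipy.stats.f_oneway`. The three lists of blood sugar readings are invented for this example and do not come from any real study.

```python
from scipy.stats import f_oneway

# Hypothetical blood sugar readings (mg/dL), one list per medicine.
# These numbers are made up purely for illustration.
medicine_a = [110, 115, 108, 112, 117]
medicine_b = [125, 130, 128, 122, 127]
medicine_c = [111, 109, 114, 116, 113]

# f_oneway runs a one-way ANOVA and returns the F statistic and its p-value.
f_stat, p_value = f_oneway(medicine_a, medicine_b, medicine_c)

print(f"F = {f_stat:.2f}, p = {p_value:.4f}")
```

With data like this, where one medicine’s readings sit well apart from the other two, the F Statistic comes out far above 1.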

ANOVA Formula

The basic ANOVA formula is F = MST/MSE, with the following representations:

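- F – The F statistic (the ANOVA coefficient)

- MST – The mean square due to treatment: the between-group sum of squares divided by its degrees of freedom (number of groups minus 1)

- MSE – The mean square due to error: the within-group sum of squares divided by its degrees of freedom (number of observations minus number of groups)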

However, several variations exist, typically depending on how many factors influence your statistical model. You may have to account for blocking variables, nested factors, and effects both fixed and random – all can impact your formula, as well as your decision to use one-way or two-way ANOVA.
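To make the basic F = MST/MSE formula concrete, the following sketch computes it by hand for the simplest one-way case, using only the Python standard library. The function name `one_way_f` and the sample numbers are assumptions made for this example.

```python
from statistics import mean

def one_way_f(groups):
    """Return (F, MST, MSE) for a list of groups of numeric observations."""
    k = len(groups)                       # number of groups (treatments)
    n = sum(len(g) for g in groups)       # total number of observations
    grand_mean = mean(x for g in groups for x in g)

    # Sum of squares due to treatment (between groups)
    sst = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Sum of squares due to error (within groups)
    sse = sum((x - mean(g)) ** 2 for g in groups for x in g)

    mst = sst / (k - 1)   # mean square due to treatment
    mse = sse / (n - k)   # mean square due to error
    return mst / mse, mst, mse

# Hypothetical data: three groups of three observations each.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, mst, mse = one_way_f(groups)
print(f_stat, mst, mse)
```

Here the group means are 2, 3, and 6, so MST works out to 13 and MSE to 1, giving an F of 13.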

ANOVA Terminology

To better understand ANOVA, it’s important to know the terminology related to this data analysis tool. The following are key terms to learn:

- Null Hypothesis: The null hypothesis states that there is no difference between the means of the groups you test using ANOVA. If you get a result close to 1 from the above formula, your data is consistent with the null hypothesis, so you fail to reject it. That isn’t proof that the group means are identical.

- Alternative Hypothesis: The alternative hypothesis states that at least one group mean differs from the others. It’s the claim your data supports when you reject the null hypothesis.

- Dependent Variable: The dependent variable is the outcome you measure, which may be affected by one or more independent variables.

- Independent Variable: All independent variables have the capacity to affect the dependent variable but aren’t affected themselves by other variables.

- Factor: Any independent variable is dubbed a “factor,” because it plays a role in affecting the dependent variable.

- Level: A level is one of the distinct values or categories that an independent variable takes in an experiment. A factor with three categories has three levels.
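The terms above can be mapped onto a small dataset. In this sketch, the factor name `medicine`, its levels, and all readings are hypothetical and chosen only to show which part of the data each term refers to.

```python
# Hypothetical records of (medicine, blood_sugar); values invented for illustration.
records = [
    ("A", 110), ("A", 115), ("A", 108),
    ("B", 125), ("B", 130), ("B", 128),
    ("C", 111), ("C", 109), ("C", 114),
]

factor = "medicine"                                      # the independent variable
levels = sorted({medicine for medicine, _ in records})   # its distinct values

# The dependent variable (blood sugar), grouped by level of the factor.
by_level = {lvl: [y for m, y in records if m == lvl] for lvl in levels}
group_means = {lvl: sum(ys) / len(ys) for lvl, ys in by_level.items()}

print(factor, levels)
print(group_means)
```

The null hypothesis would say the three group means are equal in the underlying population. The alternative says at least one differs.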

One-Way vs. Two-Way ANOVA

Complicating matters further is the fact that there are two common types of ANOVA tests – one-way and two-way. Each has a specific use for the analysis of variance, making it crucial that you understand which is relevant to your specific statistical test.

One-Way

Also known as a “simple ANOVA” or “single-factor ANOVA,” a one-way ANOVA is carried out when you only have one independent variable affecting your dependent variable. However, that single independent variable must have at least two levels, and with exactly two levels the test gives the same answer as a two-sample t-test, so ANOVA is usually reserved for three or more. For instance, measuring change over 12 months gives you 12 levels – one for each month of the year.

In a one-way ANOVA, you make several assumptions:

- Variance: The variance of the dependent variable should be roughly equal across all experiment groups. This is often called homogeneity of variance.

- Independence: Each observation of the dependent variable is independent of every other observation.

- Normality: Within each group, the dependent variable is approximately normally distributed, meaning the distribution will appear as a bell curve when graphed out.

- Continuous: Your dependent variable is measurable on a scale that you can subdivide.
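Two of these assumptions can be checked with standard tests. The sketch below uses SciPy’s `levene` (equal variances) and `shapiro` (normality) on invented data. It’s one common way to screen the assumptions, not the only one, and small samples give these tests little power.

```python
from scipy.stats import levene, shapiro

# Hypothetical measurements for three groups; values invented for illustration.
groups = {
    "A": [110, 115, 108, 112, 117, 113],
    "B": [125, 130, 128, 122, 127, 124],
    "C": [111, 109, 114, 116, 113, 110],
}

# Levene's test: the null hypothesis is that all groups have equal variance.
lev_stat, lev_p = levene(*groups.values())

# Shapiro-Wilk test per group: the null hypothesis is that the data is normal.
shapiro_p = {}
for name, values in groups.items():
    _, p = shapiro(values)
    shapiro_p[name] = p

print(f"Levene p = {lev_p:.3f}")
for name, p in shapiro_p.items():
    print(f"Shapiro-Wilk p for group {name} = {p:.3f}")
```

A small p-value from either test is a warning that the corresponding assumption may not hold. Independence can’t be tested this way, since it depends on how the data was collected.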

Two-Way

Whenever you have two independent variables affecting your dependent variable, you use two-way ANOVA, which is a form of factorial ANOVA. Designs with more than two independent variables extend the same idea to higher-order factorial ANOVA. Complicating things further, each of these variables – or factors – can have several levels.

For example, imagine you measure how many flowers are in a garden for each month of the year. With month as the only factor, that would require a one-way ANOVA. However, suppose you also record a second variable, such as sunlight exposure each month, grouped into bands like low, medium, and high. Two factors call for the two-way ANOVA test. Further variables, such as rainfall and temperature, would each add another factor and move you beyond two-way into a larger factorial design.

Similar to one-way ANOVA, two-way ANOVA requires assumptions related to variance, independence, normality, and the continuous nature of the dependent variable. The major difference comes in categories – each factor should be categorical, sorting every observation into exactly one of its levels.
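To show what a two-way calculation involves, here is a minimal sketch for the simplest case: a balanced design in which every combination of levels has the same number of observations. The function name `two_way_f`, the factors `season` and `sunlight`, and the flower counts are all hypothetical. Unbalanced data needs a more general method than this.

```python
from statistics import mean

def two_way_f(cells):
    """F statistics for a balanced two-way ANOVA with replication.

    cells maps (level_of_A, level_of_B) to a list of observations. Every
    cell is assumed to hold the same number of observations (at least 2).
    """
    a_levels = sorted({a for a, _ in cells})
    b_levels = sorted({b for _, b in cells})
    na, nb = len(a_levels), len(b_levels)
    r = len(next(iter(cells.values())))           # replicates per cell
    grand = mean(x for obs in cells.values() for x in obs)

    a_mean = {a: mean(x for b in b_levels for x in cells[(a, b)]) for a in a_levels}
    b_mean = {b: mean(x for a in a_levels for x in cells[(a, b)]) for b in b_levels}
    cell_mean = {key: mean(obs) for key, obs in cells.items()}

    # Sums of squares for each factor, their interaction, and the error term.
    ss_a = nb * r * sum((a_mean[a] - grand) ** 2 for a in a_levels)
    ss_b = na * r * sum((b_mean[b] - grand) ** 2 for b in b_levels)
    ss_ab = r * sum(
        (cell_mean[(a, b)] - a_mean[a] - b_mean[b] + grand) ** 2
        for a in a_levels for b in b_levels
    )
    ss_err = sum((x - cell_mean[key]) ** 2 for key, obs in cells.items() for x in obs)

    mse = ss_err / (na * nb * (r - 1))            # mean square due to error
    return {
        "A": (ss_a / (na - 1)) / mse,
        "B": (ss_b / (nb - 1)) / mse,
        "A:B": (ss_ab / ((na - 1) * (nb - 1))) / mse,
    }

# Hypothetical flower counts: factor A is season, factor B is sunlight band.
cells = {
    ("spring", "low"): [4, 6],
    ("spring", "high"): [8, 10],
    ("summer", "low"): [6, 8],
    ("summer", "high"): [14, 16],
}
f_values = two_way_f(cells)
print(f_values)
```

The result holds one F value per factor plus one for their interaction, which is what separates a two-way ANOVA from running two separate one-way tests.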

What ANOVA Reveals & Why You Should Use It

On the surface, it may appear there is little point in using ANOVA. You can see that the means differ just by looking at them, so why use a formula at all? The trouble is that sample means almost always differ a little because of sampling error, and looking alone can’t tell you whether a gap is larger than chance would produce.

ANOVA reveals whether the difference in your mean values is truly statistically significant, and, when you test more than one factor, which independent variable is affecting your dependent variable. In other words, you can judge whether the differences you find are plausibly the result of sampling error, or whether one of your independent variables is actually a significant factor in your results. What ANOVA alone doesn’t tell you is which specific groups differ. That takes a follow-up (post hoc) comparison.
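Sampling error is easy to see in a quick simulation. This sketch draws three samples from the same population, so any gap between their means is chance alone. The population mean of 100, the standard deviation of 15, and the sample size of 10 are arbitrary choices for illustration.

```python
import random
from statistics import mean

random.seed(42)  # fixed seed so the run is repeatable

# Three samples drawn from the SAME normal population (mean 100, sd 15).
samples = [[random.gauss(100, 15) for _ in range(10)] for _ in range(3)]
sample_means = [mean(s) for s in samples]

# The means differ even though there is no real difference between groups.
print([round(m, 1) for m in sample_means])
print("Largest gap between means:", round(max(sample_means) - min(sample_means), 1))
```

Eyeballing these means could suggest a real effect where none exists, which is the mistake a formal test guards against.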

Make Statistical Analysis Simple with Julius AI

The challenge with ANOVA tests is that the more independent variables you introduce, the tougher it is to track your numbers accurately. That’s where computational AI comes in. With Julius AI, you’re able to quickly analyze large datasets – using either one-way or two-way ANOVA – to not only gain insights from your data but to visualize those insights graphically.

It’s simple and straightforward – you provide a dataset and instruct Julius to conduct an ANOVA analysis. It does the rest of the work for you, giving you a full breakdown of your results. Sign up today and unleash the power of computational AI in your statistical analysis of data.

Frequently Asked Questions (FAQs)

How to explain ANOVA results?

ANOVA results are typically explained by examining the F Statistic and the associated p-value. If the p-value is less than your chosen significance level (e.g., 0.05), it indicates that at least one group mean differs significantly from the others. It doesn’t identify which group differs, or by how much.

Is ANOVA used for qualitative or quantitative?

ANOVA is used for analyzing quantitative data, specifically the differences in means of a numeric response variable across groups defined by categorical (qualitative) independent variables. It evaluates whether the variations between these groups are statistically significant.

How to tell if ANOVA is significant?

ANOVA is significant if the p-value from the test is lower than the predetermined significance level (commonly 0.05). This means you can reject the null hypothesis: differences between group means as large as those you observed would be unlikely if the groups truly shared the same mean.
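If you already have an F Statistic and its degrees of freedom, the decision can be sketched as follows, using the survival function of SciPy’s F distribution (`scipy.stats.f.sf`). The helper name `anova_p_value` and the example inputs are assumptions made for illustration.

```python
from scipy.stats import f

def anova_p_value(f_stat, df_between, df_within):
    """Upper-tail probability of the F distribution at f_stat."""
    return f.sf(f_stat, df_between, df_within)

alpha = 0.05                      # chosen significance level

# Hypothetical result: F = 13 with 3 groups and 9 observations,
# giving 3 - 1 = 2 and 9 - 3 = 6 degrees of freedom.
p = anova_p_value(13.0, 2, 6)
reject_null = p < alpha

print(f"p = {p:.4f}, reject null hypothesis: {reject_null}")
```

The significance level should be fixed before looking at the data. Choosing it afterward undermines the test.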